Learning how to use LangGraph

Let's understand LangGraph.

Recently, I developed an LLM service based on LangChain as part of a project. While building a Routing Chain and individual Business Chains with LangChain, I found myself needing features beyond simple LLM calls, such as tool usage, state management, conditional branching, and memory persistence. Although I’m still a beginner, I’ve already run into challenges like exception handling, chains duplicated for each scenario, and debugging and maintenance that get harder as the system grows. Going forward, I also need to invoke tools through AI agents and handle tasks of varying complexity, so I went looking for a solution, and LangGraph seems to be the answer. I plan to cover it in more detail in future posts.

LangGraph?

LangGraph is a library within the LangChain ecosystem that allows developers to define and execute the logical flow of LLM-based applications in the form of a graph.

Each node in the graph is a function or Runnable that performs a specific task (e.g., retrieving data, summarizing documents, invoking an LLM). Nodes are connected by edges and communicate by reading and updating a shared state. Agents themselves can also be nodes, serving as flexible units capable of calling tools and making decisions.

Unlike simple sequential execution, LangGraph lets you build complex workflows declaratively and visualize them, with support for conditional branching, loops, stateful memory, and subgraphs. Using its structure of nodes (points) and edges (lines), LangGraph models decision-based process flows. The state carries the information, and static or conditional edges determine where execution goes next.

Ultimately, LangGraph functions as a modular and extensible workflow engine for LLM applications. Representing complex operations as graphs helps reduce system complexity and makes the application easier to maintain.

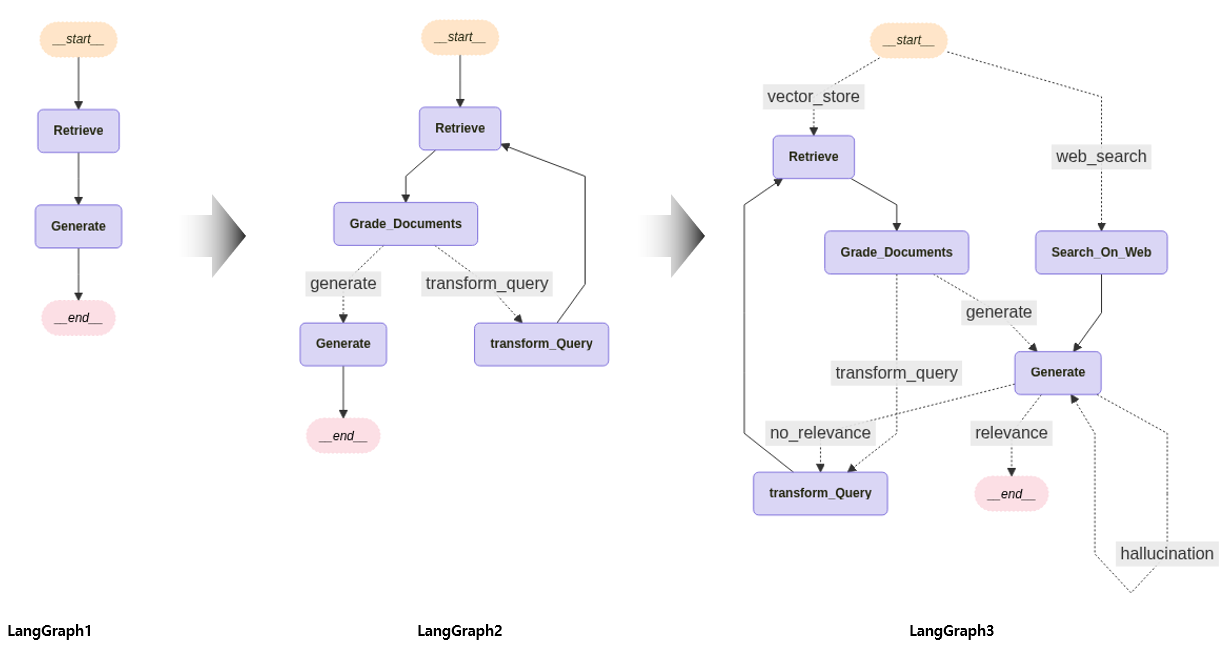

Exploring Various LLM Workflows with LangGraph


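The simplest workflow retrieves documents and then generates an answer:

```python
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, END

# GraphState, retrieve, llm_ollama_generate and visualize_graph are defined elsewhere in the project
workflow = StateGraph(GraphState)

# Add nodes
workflow.add_node("Retrieve", retrieve)
workflow.add_node("Generate", llm_ollama_generate)

# Connect the nodes
workflow.add_edge("Retrieve", "Generate")
workflow.add_edge("Generate", END)

# Set the entry point
workflow.set_entry_point("Retrieve")

# Set up memory storage for checkpointing
memory = MemorySaver()

# Compile the graph
app = workflow.compile(checkpointer=memory)

# Visualize the graph
visualize_graph(app)
```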


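Adding a document-grading step, which either proceeds to generation or rewrites the query and retrieves again:

```python
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, END

# GraphState, the node functions, condition_to_generate and visualize_graph are defined elsewhere in the project
workflow = StateGraph(GraphState)

# Add nodes
workflow.add_node("Retrieve", retrieve)
workflow.add_node("Grade_Documents", grade_documents)
workflow.add_node("Generate", llm_ollama_generate)
workflow.add_node("transform_Query", transform_query)

# Connect the nodes
workflow.add_edge("Retrieve", "Grade_Documents")
# condition_to_generate returns "transform_query" or "generate"; the dict maps each value to the next node
workflow.add_conditional_edges(
    "Grade_Documents",
    condition_to_generate,
    {
        "transform_query": "transform_Query",
        "generate": "Generate",
    },
)
workflow.add_edge("transform_Query", "Retrieve")  # loop back to retrieval with the rewritten query
workflow.add_edge("Generate", END)

# Set the entry point
workflow.set_entry_point("Retrieve")

# Set up memory storage for checkpointing
memory = MemorySaver()

# Compile the graph
app = workflow.compile(checkpointer=memory)

# Visualize the graph
visualize_graph(app)
```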
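The post does not show `condition_to_generate`. The sketch below is one hypothetical version. It assumes that `grade_documents` keeps only relevant documents in `state["documents"]` and that `transform_query` increments a `rewrite_count` field, which puts a cap on the Retrieve, Grade_Documents, transform_Query loop. The field names and the cap are assumptions.

```python
from typing import Literal, TypedDict


# Hypothetical fields; the project's real GraphState is not shown in this post.
class GraphState(TypedDict, total=False):
    question: str
    documents: list[str]  # documents that passed grading in Grade_Documents
    rewrite_count: int    # how many times transform_Query has run


MAX_REWRITES = 2  # assumed cap so a bad query cannot loop forever


def transform_query(state: GraphState) -> dict:
    # Stand-in for an LLM rewrite; also bumps the counter the condition reads.
    return {
        "question": state["question"] + " (rephrased)",
        "rewrite_count": state.get("rewrite_count", 0) + 1,
    }


def condition_to_generate(state: GraphState) -> Literal["transform_query", "generate"]:
    # No relevant documents and rewrites still allowed: rewrite and retrieve again.
    if not state.get("documents") and state.get("rewrite_count", 0) < MAX_REWRITES:
        return "transform_query"
    # Otherwise generate, even if that means answering without context.
    return "generate"


print(condition_to_generate({"question": "q", "documents": []}))                      # transform_query
print(condition_to_generate({"question": "q", "documents": ["doc"]}))                 # generate
print(condition_to_generate({"question": "q", "documents": [], "rewrite_count": 2}))  # generate
```

Without a cap like this, a query that never retrieves relevant documents would keep cycling until the run hits LangGraph's recursion limit and fails.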


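Adding question routing and an answer check. The question is routed at START to either the vector store or a web search, and condition_to_check_hallucination decides whether to regenerate, finish, or rewrite the query:

```python
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, START, END

# GraphState, the node functions, the condition_* routing functions and visualize_graph are defined elsewhere in the project
workflow = StateGraph(GraphState)

# Add nodes
workflow.add_node("Search_On_Web", search_on_web)
workflow.add_node("Retrieve", retrieve)
workflow.add_node("Grade_Documents", grade_documents)
workflow.add_node("Generate", llm_ollama_generate)
workflow.add_node("transform_Query", transform_query)

# Connect the nodes
# Route the incoming question from START, so no separate entry point is needed
workflow.add_conditional_edges(
    START,
    condition_to_route_question,
    {
        "vector_store": "Retrieve",
        "web_search": "Search_On_Web",
    },
)

########## Retrieve ##########
workflow.add_edge("Retrieve", "Grade_Documents")
workflow.add_conditional_edges(
    "Grade_Documents",
    condition_to_generate,
    {
        "transform_query": "transform_Query",
        "generate": "Generate",
    },
)
workflow.add_edge("transform_Query", "Retrieve")

########## Web Search ##########
workflow.add_edge("Search_On_Web", "Generate")

########## Answer check ##########
# Regenerate on hallucination, finish on a relevant answer, otherwise rewrite the query
workflow.add_conditional_edges(
    "Generate",
    condition_to_check_hallucination,
    {
        "hallucination": "Generate",
        "relevance": END,
        "no_relevance": "transform_Query",
    },
)

# Set up memory storage for checkpointing
memory = MemorySaver()

# Compile the graph
app = workflow.compile(checkpointer=memory)

# Visualize the graph
visualize_graph(app)
```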
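The routing functions used above are not shown in the post either. In a real workflow these decisions would typically come from LLM-based routers and graders. The hypothetical sketch below replaces them with a keyword check and two boolean state fields (`grounded`, `answers_question`). It only illustrates the return values, which must match the keys in each path map. The topic list and field names are assumptions.

```python
from typing import Literal, TypedDict


# Hypothetical state fields standing in for the outputs of LLM graders.
class GraphState(TypedDict, total=False):
    question: str
    grounded: bool          # assumed: is the answer supported by the documents?
    answers_question: bool  # assumed: does the answer address the question?


# Assumed stand-in for an LLM router: topics the vector store is known to cover.
VECTOR_STORE_TOPICS = ("langgraph", "langchain")


def condition_to_route_question(state: GraphState) -> Literal["vector_store", "web_search"]:
    question = state["question"].lower()
    if any(topic in question for topic in VECTOR_STORE_TOPICS):
        return "vector_store"
    return "web_search"


def condition_to_check_hallucination(
    state: GraphState,
) -> Literal["hallucination", "relevance", "no_relevance"]:
    if not state.get("grounded", False):
        return "hallucination"  # regenerate the answer
    if state.get("answers_question", False):
        return "relevance"      # finish (END)
    return "no_relevance"       # rewrite the query and retry


print(condition_to_route_question({"question": "How do LangGraph checkpoints work?"}))  # vector_store
print(condition_to_check_hallucination({"grounded": True, "answers_question": False}))  # no_relevance
```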